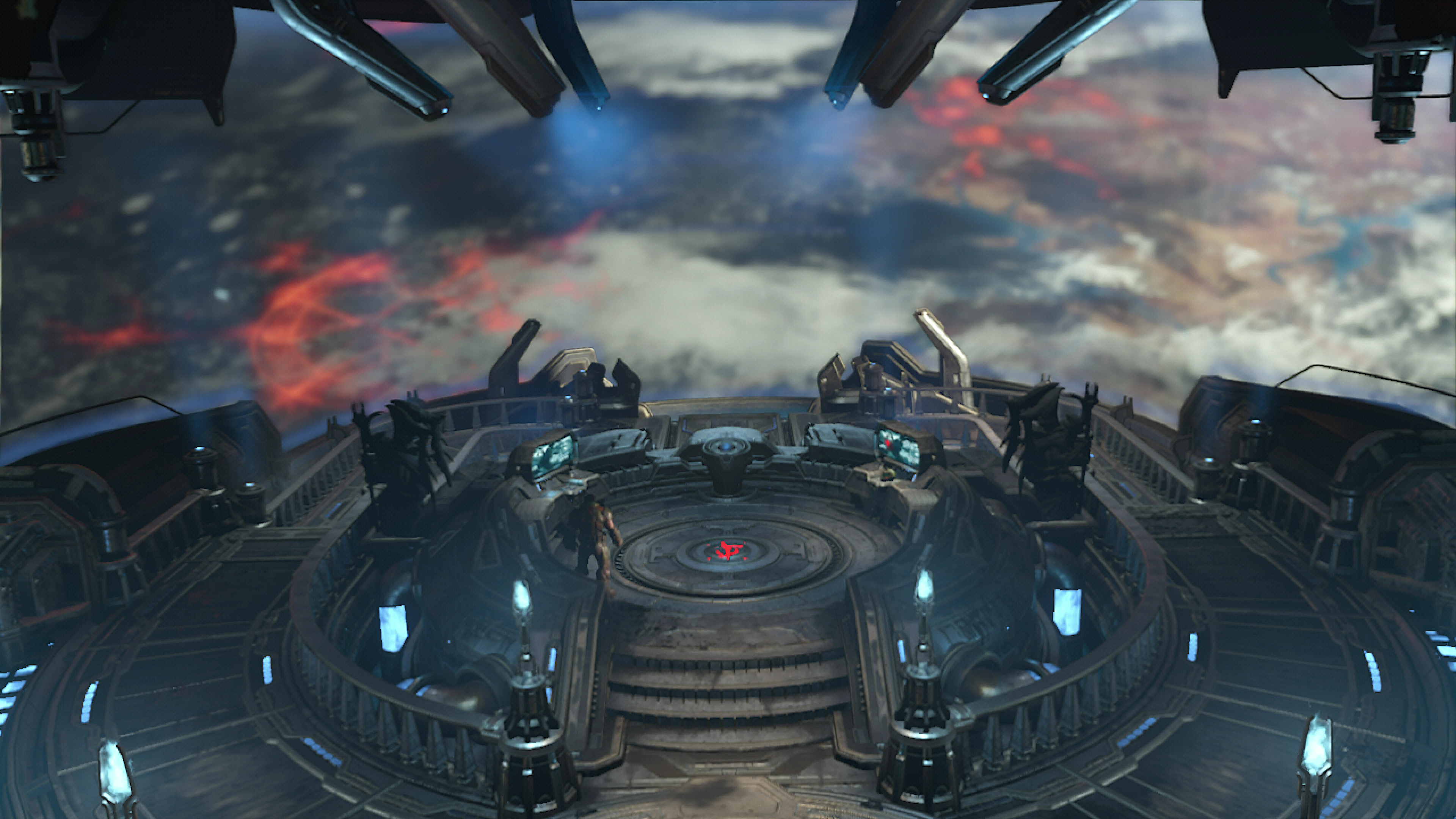

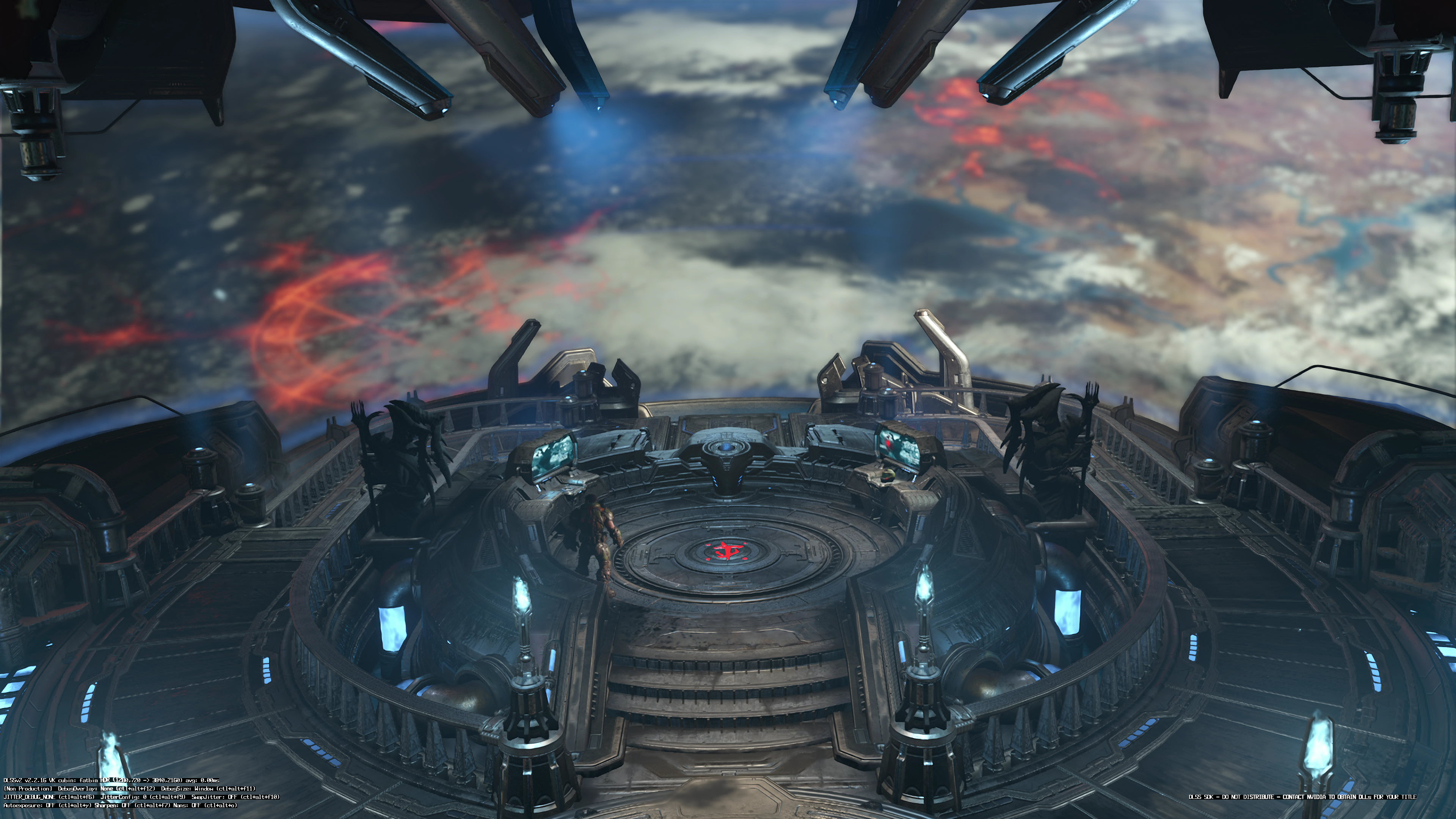

First of all, we need to look at the plausibility of an actual DLSS-capable processor ending up in a mobile console. There, at least, the answers are positive. Nvidia’s latest Tegra SoC (system on chip) is codenamed Orin. It’s based on the latest Ampere architecture and it’s primarily designed for the automotive industry, which presents our first problem - it has a 45W power budget, in a world where the debut Switch hit a maximum of 15W, with 10-11W likely used by the main processor itself (and probably half of that in mobile mode). There is a solution though: there’s an ultra low power rendition of Orin that runs at just 5W by default, but should easily scale up to higher power consumption and more performance when docked.

Even so, despite integrating the tensor cores required to make DLSS a reality, we’re still looking at a tiny power budget - and AI upscaling is not ‘free’, so the next step in our experiments is to measure the computational load of DLSS. You’ll see in the video how we did this but the method of calculation is pretty straightforward. Using Doom Eternal as a base, we used an RTX 2060 to measure the time taken to process DLSS vs native resolution rendering and came to the conclusion that the render time cost of the process is 1.9ms. The RTX 2060 has around 5.5x the machine learning capability of the Orin chip operating at 10W, so assuming linear scaling, the PC iteration of DLSS would require a substantial 10.5ms of processing time on a prospective Switch Pro. In a world where Doom Eternal targets 60fps, or 16.7ms per frame, that’s simply too high. However, for a 30fps game with a 33.3ms render budget, it’s very, very viable. It’s important to stress that our measurements of DLSS’s cost are based on comparing inputs and outputs and we don’t have access to all of the inner workings, so the calculations are approximate, but they give you some idea of the viability of the technology for a mobile platform.
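
The arithmetic behind those figures can be laid out as a short Python sketch. It only restates the numbers quoted above (the 1.9ms measurement, the roughly 5.5x machine learning gap and the linear scaling assumption); the function and constant names are our own, purely for illustration, and the result is an estimate rather than a measurement.

```python
# Figures quoted in the article; everything else here is illustrative.
DLSS_COST_RTX_2060_MS = 1.9  # measured DLSS cost on an RTX 2060
ML_CAPABILITY_RATIO = 5.5    # RTX 2060 vs Orin at 10W (approximate)


def scaled_dlss_cost_ms(measured_ms: float, capability_ratio: float) -> float:
    """Estimate DLSS cost on slower hardware, assuming linear scaling."""
    return measured_ms * capability_ratio


def frame_budget_ms(fps: float) -> float:
    """Time available to render one frame at a given frame-rate."""
    return 1000.0 / fps


def budget_share(cost_ms: float, fps: float) -> float:
    """Fraction of the frame budget consumed by a fixed per-frame cost."""
    return cost_ms / frame_budget_ms(fps)


estimated_cost = scaled_dlss_cost_ms(DLSS_COST_RTX_2060_MS, ML_CAPABILITY_RATIO)

for fps in (60, 30):
    print(
        f"{fps}fps: budget {frame_budget_ms(fps):.1f}ms, "
        f"DLSS estimate {estimated_cost:.2f}ms, "
        f"share {budget_share(estimated_cost, fps):.0%}"
    )
```

On these assumptions, DLSS would eat well over half of a 60fps frame but under a third of a 30fps one, which is the gap between 'too high' and 'viable' described above.
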
And there are a whole bunch of additional variables we need to consider. For a start, we are assuming a 4K output. There’s nothing to stop a developer using DLSS to turn that 720p mobile image into a 1440p DLSS output, then using the GPU scaler to deliver the final 4K output. In this scenario, the 10.5ms cost of DLSS in a mobile Nvidia chip drops to just 5.2ms. There would be quality loss, of course, but it may suit some games or certain visual content. We are also basing our entire testing here on a PC implementation of DLSS and it’s definitely not beyond the realms of possibility that Nvidia would optimise the technology for a console experience - remember that the company even created its own low-level graphics API just for Switch. That’s commitment.

But beyond these variables and our tests, there is actually something just as important, if not more so: can DLSS actually look decent upscaling a 720p image to 2160p? Hopefully, the screenshots on this page will illustrate precisely why DLSS is a potential game-changer for a prospective next-gen Switch and how an image rendered for a 720p mobile screen can actually transform into a perfectly viable living room presentation. DLSS not only upscales, but it anti-aliases the image in the process - a two-in-one solution, if you will. By combining information from prior frames with motion vectors that tell the algorithm where pixels are likely to move and informing all of that with deep learning, the effect is transformative.

Does a 4K DLSS image compare favourably with a native 4K presentation? In the PC space, the performance mode uses a native 1080p input for scaling, rising to 1440p in quality mode. Scaling from 720p means a lot less data to work with, meaning more inaccuracies and artefacts in the output image - but the point is that a prospective next-gen Switch does not need to deliver native 4K quality.
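
To put 'a lot less data' into numbers, here is a small Python sketch comparing pixel counts for the input resolutions mentioned above. It assumes standard 16:9 dimensions for each resolution label and uses helper names of our own. It makes no attempt to reproduce the 5.2ms figure for a 1440p output, which comes from our testing rather than from pixel counts.

```python
# Assumed 16:9 dimensions for the resolution labels used in the article.
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "2160p": (3840, 2160),
}


def pixel_count(name: str) -> int:
    """Total pixels in a frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def upscale_factor(source: str, target: str) -> float:
    """How many output pixels must be produced per input pixel."""
    return pixel_count(target) / pixel_count(source)


# Inputs discussed in the text, all scaled to a 4K (2160p) output.
for source in ("720p", "1080p", "1440p"):
    print(f"{source} -> 2160p: {upscale_factor(source, '2160p'):.2f}x the pixels")
```

A 720p input asks DLSS to produce nine output pixels for every input pixel, against four in PC performance mode and 2.25 in quality mode.
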
The criteria for success are very different when comparing a PC experience viewed at close range by users demanding a high-end experience to a more mainstream console gamer watching the action play out at distance from a living room flat panel. The new Switch doesn’t need to deliver 4K precision, it simply needs to deliver an image that looks good on a typical modern living room TV.

Beyond DLSS itself, obviously there’s a lot of theory-crafting in this article. For starters, why would we assume that Nintendo would target a 720p display for a next-gen Switch? From our perspective, it’s the best balance between GPU power and pixel density - and it’s no coincidence that Valve has targeted a similarly dense display with its own 800p screen for Steam Deck. Moving from a target 720p to 1080p basically means that a big chunk of the generational leap from Switch to its successor would be spent on more pixels, as opposed to higher quality pixels.

The next big assumption is that Nintendo will stick with Nvidia for the next-gen Switch (which is basically a no-brainer at this point - if only for compatibility’s sake) and that the firm will actually use the Orin chip, or a variation of it. On the latter point, one of the most accurate leakers around - kopite7kimi - seems pretty convinced. Even so, our calculations for DLSS in a new Switch are based on a circa 10W power budget - and there’s absolutely nothing to stop Nintendo actually pushing further than that, given adequate cooling.

But the point of this testing was basically two-fold: firstly, to figure out whether a mobile Nvidia chip based on the latest architecture could actually run DLSS - and the answer there is positive. It’s just that we need to be aware that although DLSS is based on hardware acceleration via tensor cores, it’s not a ‘free’ upscaler - there is a cost to using it.
The question is whether that cost can be offset by increasing power and frequency when docked, but the bottom line is that theoretically, DLSS is viable.

The second question concerns quality. Even given a mere 720p resolution to handle, DLSS still produces good 4K results - astonishing and remarkable when put side-by-side with the input, even if it’s ‘not as good as native’. The applications going forward are also mouth-watering - and not just for Nintendo consoles. Machine learning applications - including super-sampling - are very much a viable way forward for ‘Pro’ renditions of the PS5 and Xbox Series consoles too, if that’s the direction Sony and Microsoft want to take.